Want to market Nazi memorabilia, or recruit marchers for a far-right rally? Facebook’s self-service ad-buying platform had the right audience for you.

Until this week, when we asked Facebook about it, the world’s largest social network enabled advertisers to direct their pitches to the news feeds of almost 2,300 people who expressed interest in the topics of “Jew hater,” “How to burn jews,” or “History of ‘why jews ruin the world.’”

To test if these ad categories were real, we paid $30 to target those groups with three “promoted posts” — in which a ProPublica article or post was displayed in their news feeds. Facebook approved all three ads within 15 minutes.

After we contacted Facebook, it removed the anti-Semitic categories — which were created by an algorithm rather than by people — and said it would explore ways to fix the problem, such as limiting the number of categories available or scrutinizing them before they are displayed to buyers.

“There are times where content is surfaced on our platform that violates our standards,” said Rob Leathern, product management director at Facebook. “In this case, we’ve removed the associated targeting fields in question. We know we have more work to do, so we’re also building new guardrails in our product and review processes to prevent other issues like this from happening in the future.”

Facebook’s advertising has become a focus of national attention since it disclosed last week that it had discovered $100,000 worth of ads placed during the 2016 presidential election season by “inauthentic” accounts that appeared to be affiliated with Russia.

Like many tech companies, Facebook has long taken a hands-off approach to its advertising business. Unlike traditional media companies that select the audiences they offer advertisers, Facebook generates its ad categories automatically based both on what users explicitly share with Facebook and what they implicitly convey through their online activity.

Traditionally, tech companies have contended that it’s not their role to censor the Internet or to discourage legitimate political expression. In the wake of the violent protests in Charlottesville by right-wing groups that included self-described Nazis, Facebook and other tech companies vowed to strengthen their monitoring of hate speech.

Facebook CEO Mark Zuckerberg wrote at the time that “there is no place for hate in our community,” and pledged to keep a closer eye on hateful posts and threats of violence on Facebook. “It’s a disgrace that we still need to say that neo-Nazis and white supremacists are wrong — as if this is somehow not obvious,” he wrote.

But Facebook apparently did not intensify its scrutiny of its ad-buying platform. In all likelihood, the ad categories that we spotted were automatically generated because people had listed those anti-Semitic themes on their Facebook profiles as an interest, an employer or a “field of study.” Facebook’s algorithm automatically transforms people’s declared interests into advertising categories.

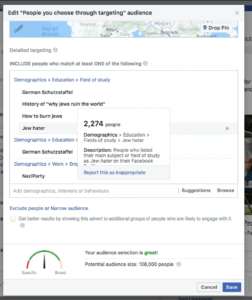

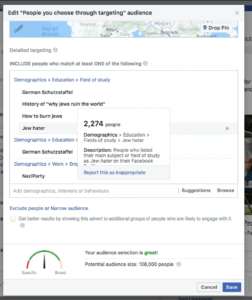

Here is a screenshot of our ad buying process on the company’s advertising portal:

This is not the first controversy over Facebook’s ad categories. Last year, ProPublica was able to block an ad that we bought in Facebook’s housing categories from being shown to African-Americans, Hispanics and Asian-Americans, raising the question of whether such ad targeting violated laws against discrimination in housing advertising. After ProPublica’s article appeared, Facebook built a system that it said would prevent such ads from being approved.

Last year, ProPublica also collected a list of the advertising categories Facebook was providing to advertisers. We downloaded more than 29,000 ad categories from Facebook’s ad system — and found categories ranging from an interest in “Hungarian sausages” to “People in households that have an estimated household income of between $100K and $125K.”

At that time, we did not find any anti-Semitic categories, but we do not know if we captured all of Facebook’s possible ad categories, or if these categories were added later. A Facebook spokesman didn’t respond to a question about when the categories were introduced.

Last week, acting on a tip, we logged into Facebook’s automated ad system to see if “Jew hater” was really an ad category. We found it, but discovered that the category — with only 2,274 people in it — was too small for Facebook to allow us to buy an ad pegged only to Jew haters.

Facebook’s automated system suggested “Second Amendment” as an additional category that would boost our audience size to 119,000 people, presumably because its system had correlated gun enthusiasts with anti-Semites.

Instead, we chose additional categories that popped up when we typed in “jew h”: “How to burn Jews,” and “History of ‘why jews ruin the world.’” Then we added a category that Facebook suggested when we typed in “Hitler”: a category called “Hitler did nothing wrong.” All were described as “fields of study.”

These ad categories were tiny. Only two people were listed as the audience size for “how to burn jews,” and just one for “History of ‘why jews ruin the world.’” Another 15 people comprised the viewership for “Hitler did nothing wrong.”

Facebook’s automated system told us that we still didn’t have a large enough audience to make a purchase. So we added “German Schutzstaffel,” commonly known as the Nazi SS, and the “Nazi Party,” which were both described to advertisers as groups of “employers.” Their audiences were larger: 3,194 for the SS and 2,449 for Nazi Party.

Still, Facebook said we needed more — so we added people with an interest in the National Democratic Party of Germany, a far-right, ultranationalist political party, with its much larger viewership of 194,600.

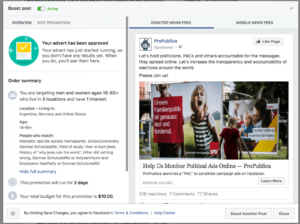

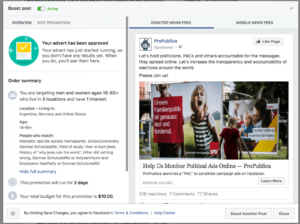

Once we had our audience, we submitted our ad, which promoted an unrelated ProPublica news article. Within 15 minutes, Facebook approved our ad, with one change. In its approval screen, Facebook described the ad targeting category “Jew hater” as “Antysemityzm,” the Polish word for anti-Semitism. Just to make sure it was referring to the same category, we bought two additional ads using the term “Jew hater” in combination with other terms. Both times, Facebook changed the ad targeting category “Jew hater” to “Antysemityzm” in its approval.

Here is one of our approved ads from Facebook:

A few days later, Facebook sent us the results of our campaigns. Our three ads reached 5,897 people, generating 101 clicks and 13 “engagements,” which could be a “like,” a “share” or a comment on a post.

Since we contacted Facebook, most of the anti-Semitic categories have disappeared.

Facebook spokesman Joe Osborne said that they didn’t appear to have been widely used. “We have looked at the use of these audiences and campaigns and it’s not common or widespread,” he said.

We looked for analogous advertising categories for other religions, such as “Muslim haters.” Facebook didn’t have them.

Update, Sept. 14, 2017: This story has been updated to include the Facebook spokesman’s name.
